Over the last few decades, display technology has changed a lot. CRTs were replaced by LCDs, and LCDs were in turn replaced by slim LED displays. The latest entrant in this race is HDR, which many consider the future of display innovation.

But what is HDR? What is HDR+? What is the difference between HDR10 and HDR10+? We will get to know all of these terms in this article.

What Is HDR (High Dynamic Range)?

HDR stands for High Dynamic Range. To understand HDR, we first need to understand what dynamic range (DR) is.

Dynamic range is the difference between the brightest spot and the darkest spot that a screen can show. HDR goes one step further and widens this difference. That is what we call High Dynamic Range, or HDR.
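To put a number on that difference, dynamic range is commonly expressed as a contrast ratio (brightest level divided by darkest level) or in stops, where each stop is a doubling of light. The snippet below is a minimal sketch of that arithmetic. The function name and the sample brightness values are assumptions chosen for illustration, not measurements of any real display.

```python
import math


def dynamic_range(peak_nits: float, black_nits: float) -> tuple[float, float]:
    """Return (contrast_ratio, stops) for a display.

    peak_nits:  brightest level the screen can show
    black_nits: darkest level the screen can show (must be above zero)
    """
    if peak_nits <= 0 or black_nits <= 0:
        raise ValueError("brightness values must be positive")
    ratio = peak_nits / black_nits
    stops = math.log2(ratio)  # one stop = one doubling of brightness
    return ratio, stops


# Assumed example values, for illustration only.
sdr_ratio, sdr_stops = dynamic_range(peak_nits=300, black_nits=0.3)
hdr_ratio, hdr_stops = dynamic_range(peak_nits=1000, black_nits=0.05)

print(f"SDR example: {sdr_ratio:,.0f}:1, {sdr_stops:.1f} stops")
print(f"HDR example: {hdr_ratio:,.0f}:1, {hdr_stops:.1f} stops")
```

With these assumed numbers, the HDR example has both a brighter peak and a darker black, so its ratio is much larger than the SDR one.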

So HDR is all about increasing brightness, contrast, and detail so that the picture looks closer to a real-life scene. As a result, your eyes can perceive darker blacks and brighter whites.

Cameras with an HDR mode usually capture multiple shots at different exposures, and the processor then combines them into one image that keeps detail in both the brightest and the darkest areas.

That is why, when you take a picture with HDR enabled, it takes a moment before the captured image appears: the shots are being processed in the background.
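The idea of combining several exposures into one picture can be sketched in a few lines. The example below is a simplified, exposure-fusion style blend: each pixel is averaged across the shots, and values near mid-gray get more weight than values that are crushed to black or blown out to white. The function names and the weighting rule are assumptions for illustration; real camera pipelines are considerably more involved.

```python
def well_exposedness(value: float) -> float:
    """Weight that peaks at mid-gray (0.5) and drops to 0 at pure black or white."""
    return 1.0 - abs(2.0 * value - 1.0)


def fuse_pixel(exposures: list[float], eps: float = 1e-6) -> float:
    """Blend one pixel from several exposures (values in the range 0.0 to 1.0)."""
    # eps keeps the division safe when every shot is fully black or white.
    weights = [well_exposedness(v) + eps for v in exposures]
    return sum(w * v for w, v in zip(weights, exposures)) / sum(weights)


def fuse_images(images: list[list[float]]) -> list[float]:
    """Blend several same-sized images, each given as a flat list of pixels."""
    return [fuse_pixel(list(pixels)) for pixels in zip(*images)]


# Three assumed shots of the same two pixels: under-, normally and over-exposed.
under = [0.0, 0.2]
normal = [0.5, 0.6]
over = [1.0, 1.0]

print(fuse_images([under, normal, over]))
```

The first pixel is dominated by the normally exposed shot, because the other two shots carry no usable detail there.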

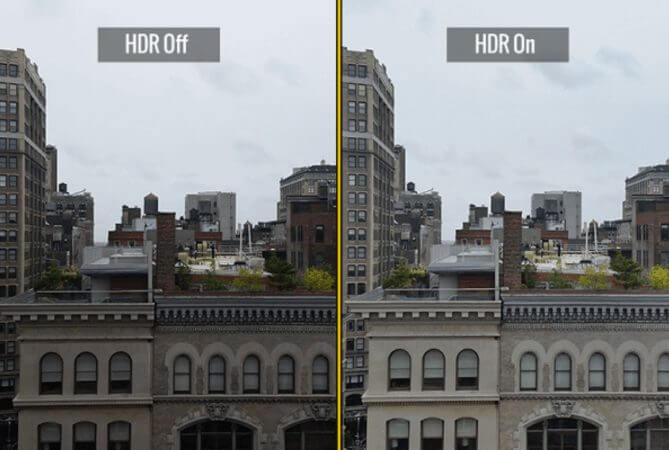

Let us take an example to show you the concept of HDR.

In the above example, the image on the left does not show true colors. The white is not pure white, and the black is not pure black.

In the image on the right, you will notice better color reproduction, and the picture looks much closer to the real scene. That is the power of HDR.

Most older monitors and TVs use SDR (Standard Dynamic Range) technology, which cannot reproduce colors as faithfully, and their brightness is typically in the range of 300-500 nits.

Displays with HDR have brightness in the range of 800 nits to 1500 nits, or even more.

Brightness is measured in nits. One nit is equal to one candela per square meter (cd/m2), which is roughly the light of a single candle spread over one square meter.

There are different types of HDR standards available in the market, and we will talk about all those standards in detail in this article.

- HDR10

- HDR10+

- Dolby Vision

- HLG

HDR10

If a display supports HDR, it almost always supports HDR10, which is the baseline standard of HDR technology. HDR10 content is typically mastered for a peak brightness of 1000 to 4000 nits with a 10-bit color depth.

HDR10 is also known as static HDR because it sends static metadata along with the video stream. This metadata is encoded information about the color and brightness settings that the display needs to make the picture look real, and it stays the same for the entire video.

The best part about HDR10 is that most smart TVs support it, and most HDR content is available in HDR10.

With a baseline of 1000 nits against the 300-500 nits of SDR, HDR10 can be at least twice as bright. So if you are moving from SDR to HDR, you will see a huge difference in picture quality and color reproduction.

HDR10+

HDR10+ works similarly to HDR10, but it sends dynamic metadata that allows displays to control color and brightness frame by frame. That allows displays to deliver a more realistic picture.
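The difference between static and dynamic metadata is easier to see as data. In the sketch below, the static record holds one set of values for the whole video (MaxCLL and MaxFALL are the content light level fields that HDR10 carries), while the dynamic version is a list of per-scene records. The `SceneMetadata` class, its fields, and the helper functions are hypothetical simplifications for illustration and do not reproduce the actual HDR10+ metadata layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StaticMetadata:
    """One set of values for the entire video (HDR10 style)."""
    max_cll_nits: float   # brightest single pixel anywhere in the video
    max_fall_nits: float  # highest frame-average brightness in the video


@dataclass(frozen=True)
class SceneMetadata:
    """Hypothetical per-scene record standing in for dynamic metadata."""
    first_frame: int
    last_frame: int
    scene_peak_nits: float


def peak_hint_static(meta: StaticMetadata, frame: int) -> float:
    """Static metadata gives the display the same hint for every frame."""
    return meta.max_cll_nits


def peak_hint_dynamic(scenes: list[SceneMetadata], frame: int, fallback: float) -> float:
    """Dynamic metadata lets the hint follow the scene the frame belongs to."""
    for scene in scenes:
        if scene.first_frame <= frame <= scene.last_frame:
            return scene.scene_peak_nits
    return fallback


# Assumed example: a dark scene followed by a bright one.
static = StaticMetadata(max_cll_nits=4000, max_fall_nits=400)
scenes = [SceneMetadata(0, 99, 200), SceneMetadata(100, 199, 4000)]

print(peak_hint_static(static, frame=50))            # same value for any frame
print(peak_hint_dynamic(scenes, frame=50, fallback=4000))
```

With static metadata, the dark scene is treated as if it could reach the brightest value in the whole video. With per-scene data, the display can adapt its processing to the much lower peak of that scene.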

The display brightness is between 1000 and 4000 nits, with a resolution that can go all the way up to 8K. It supports a 10-bit color depth like HDR10.

Many OTT platforms, such as Netflix, Amazon Prime Video, and Hulu, support HDR10+.

Dolby Vision

Dolby Vision is another type of HDR technology developed by Dolby Labs, the same company that developed Dolby Audio and Dolby Atmos.

Dolby Vision is similar to HDR10+ because it sends dynamic metadata to control the brightness and contrast of each frame of the video, thus providing a more realistic image. The difference is that it supports 12-bit colors (68 billion colors).

The brightness can go up to 10000 nits, which is 10 times the 1000-nit baseline of HDR10.

Companies that want to adopt Dolby Vision have to pay a royalty fee to Dolby Labs as it is a proprietary standard, unlike HDR10 and HDR10+, which are royalty-free HDR standards.

Hybrid Log Gamma

Hybrid Log Gamma (HLG) is a newer HDR standard developed by the BBC (British Broadcasting Corporation) and NHK (Japan Broadcasting Corporation).

The salient feature of HLG is that it is backward compatible. For example, if you have an HDR-enabled TV, you can watch HLG content in full HDR, but if you have an old-school SDR TV, you can still watch the same HLG content, and it is simply shown at SDR level.

HLG encodes both the HDR and SDR information into a single signal. So it is very efficient and cost-effective for broadcasters, as they don’t have to send two different signals for HDR and SDR. That also reduces the bandwidth required to deliver those signals.
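The "hybrid" in the name describes the shape of the encoding curve: the darker part of the signal follows a square-root (gamma-like) curve that an SDR TV can interpret in the usual way, and the brighter part switches to a logarithmic curve that packs in the extra highlights. The sketch below implements that curve using the constants published in ITU-R BT.2100. The function name is an assumption, and the code is meant as an illustration of the curve, not as a broadcast-grade implementation.

```python
import math

# Constants of the HLG curve as published in ITU-R BT.2100.
A = 0.17883277
B = 0.28466892  # equals 1 - 4 * A
C = 0.55991073  # equals 0.5 - A * ln(4 * A)


def hlg_oetf(scene_light: float) -> float:
    """Encode normalized scene light (0.0 to 1.0) into an HLG signal (0.0 to 1.0)."""
    if not 0.0 <= scene_light <= 1.0:
        raise ValueError("scene_light must be between 0.0 and 1.0")
    if scene_light <= 1.0 / 12.0:
        # Lower part: square-root curve, similar to a conventional gamma curve.
        return math.sqrt(3.0 * scene_light)
    # Upper part: logarithmic curve that compresses the highlights.
    return A * math.log(12.0 * scene_light - B) + C


for light in (0.0, 1.0 / 12.0, 0.25, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

The two parts meet at a signal value of 0.5, so the lower half of the signal range behaves much like an ordinary SDR picture, and the upper half is reserved for highlights.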

Like HDR10, HLG uses a 10-bit color depth, and it is typically specified for a peak brightness of 1000 nits. Unlike HDR10+ and Dolby Vision, HLG does not carry any metadata. The brightness curve is built into the signal itself, which is what lets the same signal work on both SDR and HDR screens.

The main issue with HLG is that not much content is available right now, and TV support is still catching up, but it may slowly gain momentum.

Terminologies Used In HDR Standard

Now we know what exactly HDR is, but there may still be some confusion about the different terms used in HDR, like color depth, peak brightness, and tone mapping. So we will also learn about those here.

Color Depth

More colors mean a more vivid, more realistic picture. For example, a display with low color depth may render two close shades, such as black and a very dark gray, as almost the same color, while a display with higher color depth can show them as distinct shades. That is why displays with more color depth show much better picture quality.

In HDR, there are two different color depth levels: 10-bit and 12-bit. 10-bit color supports about 1 billion possible colors, whereas 12-bit color supports about 68 billion colors.
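These figures follow directly from the bit depth: each of the three color channels (red, green, and blue) gets 2 to the power of the bit depth levels, and the total number of colors is the product of the three. A minimal sketch of that arithmetic follows; the function name is an assumption for illustration.

```python
def color_count(bits_per_channel: int) -> int:
    """Number of distinct colors for a given bit depth per RGB channel."""
    levels = 2 ** bits_per_channel  # shades available per channel
    return levels ** 3              # red x green x blue combinations


for bits in (8, 10, 12):
    print(f"{bits}-bit: {2 ** bits:,} shades per channel, {color_count(bits):,} colors")
```

For comparison, the 8-bit depth commonly used for SDR gives about 16.7 million colors, so 10-bit color offers 64 times as many.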

Peak Brightness Level

The brightness of displays is measured in cd/m2 (candela per square meter), also called nits. One nit is roughly the light of a single candle spread over one square meter.

HDR standards offer peak brightness from 1000 nits up to 10000 nits in the case of Dolby Vision. More brightness alone does not mean a better picture.

Only when more brightness is accompanied by more contrast and more colors does it give better picture quality.

Tone Mapping

What if you have a 4000-nit signal, but your TV supports only up to 1000 nits? An older TV without tone mapping may not display the picture properly, because it cannot handle that signal.

Most new-generation TVs come with a tone mapping feature. It converts the 4000-nit signal to a 1000-nit signal and shows that to you.

It won’t give an error that the signal is not supported. The 4000-nit and 1000-nit figures are just an example; a modern TV can scale down any such signal to a level that it can display.
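A very simple way to picture tone mapping is a "knee" curve: brightness values below a chosen knee point pass through unchanged, and everything above it is squeezed so that the brightest value in the signal lands exactly on the brightest value the TV can show. The sketch below implements that idea. The function name, the default values, and the linear roll-off are assumptions for illustration; actual TVs use their own, more refined curves.

```python
def tone_map(nits: float,
             source_peak: float = 4000.0,
             display_peak: float = 1000.0,
             knee: float = 0.75) -> float:
    """Map a brightness value from the signal range into the display range.

    knee is the fraction of display_peak below which values are left untouched.
    """
    nits = max(0.0, min(nits, source_peak))  # keep the input inside the signal range
    if source_peak <= display_peak:
        return nits  # the display can already show the whole signal
    knee_nits = knee * display_peak
    if nits <= knee_nits:
        return nits  # shadows and mid-tones are shown as they are
    # Compress [knee_nits, source_peak] linearly into [knee_nits, display_peak].
    t = (nits - knee_nits) / (source_peak - knee_nits)
    return knee_nits + t * (display_peak - knee_nits)


for value in (100, 750, 2375, 4000):
    print(f"{value} nits in the signal -> {tone_map(value):.0f} nits on screen")
```

With the assumed defaults, anything up to 750 nits is shown as is, and the range from 750 to 4000 nits is compressed into the remaining 750 to 1000 nits of the display.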

Difference Between HDR, HDR10, HDR10+, Dolby Vision And HLG

Here are the key differences between HDR, HDR10, HDR10+, Dolby Vision, and HLG HDR formats.

| Attributes | Dolby Vision | HDR10 | HDR10+ | HLG |
|---|---|---|---|---|
| Peak Brightness | 10K nits | 1K-4K nits | 1K-4K nits | 1K nits |
| Color Depth | 12-bit | 10-bit | 10-bit | 10-bit |
| Resolution | 8K | 4K | 8K | – |
| HDR Type (Metadata) | Dynamic | Static | Dynamic | None |
| Availability | Good | Great | Good | Limited |
| Backward Compatibility | No | No | No | Yes |
| Cost | More | Less | More | Less |

Conclusion

I hope you got a fair idea about different HDR formats. This may help you to make a better purchase decision next time you buy a display like a TV, monitor, or projector.

Displays with HDR are widespread, and nowadays even smartphones come with HDR. But displays with higher-end HDR standards like HDR10+ or Dolby Vision are still a costly affair, so it is best to decide based on your requirements.

If you have questions or queries, please write in the comment section, and I will be happy to assist.